SMOTE: Addressing Class Imbalance in Machine Learning

From Skewed to Balanced: Revolutionizing machine learning with SMOTE.


Introduction:

In machine learning we often face class imbalance: some classes in a dataset have far fewer instances than others. Models trained on such data tend to be biased toward the majority class and struggle to predict the minority class accurately. One widely used tool for this problem is SMOTE, the Synthetic Minority Over-sampling Technique.

Understanding Class Imbalance:

Many real-world tasks have imbalanced class distributions. Examples include fraud detection, medical diagnosis, and rare-event prediction. In these tasks the positive class (minority) is typically vastly outnumbered by the negative class (majority). This skew can prevent traditional machine learning models from learning the patterns of the minority class.

SMOTE:

SMOTE Definition:

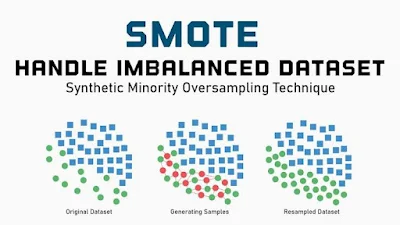

SMOTE (Synthetic Minority Over-sampling Technique) is an oversampling technique used to correct class imbalance in a dataset. It generates synthetic instances of the underrepresented (minority) class, which brings the class distribution closer to balance. This can improve model performance when one class vastly outnumbers another. Each synthetic instance is created by interpolating between existing minority-class instances, so the model gets more examples from which to learn the minority class's patterns.
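The interpolation can be written as a single formula. The notation here is introduced for illustration and is not taken from the original text. Let x_i be a minority instance and let x_nn be one neighbor chosen from its k nearest minority-class neighbors. Each synthetic instance is then:

```latex
\[
\mathbf{x}_{\text{new}} = \mathbf{x}_i + \lambda\,(\mathbf{x}_{nn} - \mathbf{x}_i),
\qquad \lambda \sim \mathcal{U}(0, 1)
\]
```

Because λ lies between 0 and 1, x_new falls on the line segment between x_i and x_nn. With λ = 0.5 it is their midpoint.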

How SMOTE Works:

1. Identifying Minority Instances:

SMOTE first identifies minority class instances in the dataset.

2. Selecting Instances:

SMOTE picks minority-class instances at random to serve as seed points for synthetic sample generation.

3. Nearest Neighbors:

For each selected instance, SMOTE then finds its k nearest neighbors among the other minority-class instances.

4. Synthetic Sample Generation:

Synthetic instances are generated at random points along the line segments connecting the selected instance to its neighbors.
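The four steps above can be sketched in base R. This is a minimal illustration under two assumptions: all features are numeric, and distance is Euclidean. `smote_sketch()` is a hypothetical helper written for this post, not the `DMwR` implementation, which among other differences also handles nominal features. Following step 2, it picks seed instances at random, whereas the original SMOTE algorithm cycles through every minority instance.

```r
# Hypothetical helper: minimal SMOTE sketch for numeric minority-class features
smote_sketch <- function(minority, n_new, k = 5) {
  minority <- as.matrix(minority)          # step 1: minority instances only
  n <- nrow(minority)
  stopifnot(n >= 2)                        # need at least one neighbor
  k <- min(k, n - 1)                       # cannot use more neighbors than exist
  dists <- as.matrix(dist(minority))       # pairwise Euclidean distances
  diag(dists) <- Inf                       # a point is not its own neighbor
  synthetic <- matrix(NA_real_, nrow = n_new, ncol = ncol(minority),
                      dimnames = list(NULL, colnames(minority)))
  for (j in seq_len(n_new)) {
    i <- sample.int(n, 1)                  # step 2: random seed instance
    neighbors <- order(dists[i, ])[1:k]    # step 3: its k nearest minority neighbors
    nn <- neighbors[sample.int(k, 1)]      # choose one of them at random
    lambda <- runif(1)                     # step 4: random point on the segment
    synthetic[j, ] <- minority[i, ] + lambda * (minority[nn, ] - minority[i, ])
  }
  synthetic
}

# Usage on five made-up minority instances with two features
set.seed(42)
minority <- data.frame(x1 = c(1.0, 1.5, 2.0, 2.5, 3.0),
                       x2 = c(2.0, 1.0, 2.5, 1.5, 3.0))
synthetic <- smote_sketch(minority, n_new = 5, k = 3)
synthetic
```

Every synthetic row lies between two real minority rows, so each of its coordinates stays within the range of the original minority data.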

Example of SMOTE:

Consider credit card fraud detection, where legitimate transactions vastly outnumber fraudulent ones. Suppose 99% of the transactions in a dataset are legitimate (the majority class) and only 1% are fraudulent (the minority class). A model trained on this data may struggle to learn the patterns associated with fraud and to identify fraudulent transactions reliably.
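A quick calculation shows why plain accuracy is misleading here. The sketch below uses a hypothetical test set of 1,000 transactions with the same 99% / 1% split. The baseline labels everything as legitimate. The numbers are illustrative, not results from a real dataset.

```r
# Hypothetical 1,000-transaction test set with a 99% / 1% split
actual <- factor(c(rep("legit", 990), rep("fraud", 10)), levels = c("legit", "fraud"))

# A useless "model" that labels every transaction as legitimate
predicted <- factor(rep("legit", length(actual)), levels = levels(actual))

accuracy <- mean(predicted == actual)
fraud_recall <- sum(predicted == "fraud" & actual == "fraud") / sum(actual == "fraud")

cat("Accuracy:", accuracy, "\n")          # Accuracy: 0.99
cat("Fraud recall:", fraud_recall, "\n")  # Fraud recall: 0
```

The baseline scores 99% accuracy while catching no fraud at all. This is the kind of majority-class bias that oversampling the minority class is meant to counter.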

To apply SMOTE to this dataset, we create synthetic fraudulent transactions until the class distribution is more balanced. Take a fraudulent transaction and its nearest neighbors among the other fraudulent transactions. SMOTE generates synthetic instances along the line segments connecting that transaction to its neighbors. This produces new examples of fraud that resemble existing fraud cases.

Oversampling with SMOTE gives the model more examples from which to learn the patterns of fraudulent transactions. As a result, a model trained on the balanced dataset is more likely than one trained on the imbalanced data to detect credit card fraud. Because the synthetic instances resemble real fraud cases, they give a fuller picture of the minority class, which can lead to a more reliable fraud-detection system.

Hands-On: Implementing SMOTE in R

Step 1: Installing and Loading DMwR

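Begin by installing and loading the DMwR package. Note that DMwR has been archived on CRAN. On recent versions of R, `install.packages("DMwR")` may therefore fail, and you may need to install the package from the CRAN archive instead: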

install.packages("DMwR")

library(DMwR)

Step 2: Loading Your Dataset

Load your dataset into R and familiarize yourself with the class distribution:

# Sample dataset with class imbalance

set.seed(123)

data <- data.frame(
  Feature1 = rnorm(100, mean = 10),
  Feature2 = rnorm(100, mean = 10),
  Class = factor(rep(c("Majority", "Minority"), times = c(90, 10)))  # imbalanced distribution: 90 vs 10
)
table(data$Class)

Step 3: Applying SMOTE Magic

Apply the SMOTE function to balance your dataset:

# Applying SMOTE

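balanced_data <- SMOTE(Class ~ ., data = data, perc.over = 100, k = 5)

Class ~ .: a formula naming the column with the class labels (Class). The dot means all other columns are used as features. DMwR's SMOTE expects the class column to be a factor.

perc.over: the number of synthetic minority instances to generate, as a percentage of the existing minority instances. A value of 100 creates one synthetic instance per minority instance.

k: the number of nearest neighbors considered when generating each synthetic instance.

perc.under (not set here, default 200): the number of majority-class instances sampled for each synthetic instance generated. This means DMwR's SMOTE also undersamples the majority class.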
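The interaction between `perc.over` and `perc.under` is easy to misjudge, so here is a small calculator. `smote_counts()` is a hypothetical helper based on our reading of the DMwR documentation. It assumes two rules: each minority instance yields perc.over/100 synthetic instances, and perc.under/100 majority instances are kept per synthetic instance (default `perc.under = 200`). It also assumes `perc.over` is a multiple of 100; DMwR handles values below 100 differently, and those are not covered here.

```r
# Hypothetical helper: expected class counts after DMwR's SMOTE() on a
# two-class problem. Assumes perc.over is a multiple of 100.
smote_counts <- function(n_minority, perc.over = 200, perc.under = 200) {
  stopifnot(perc.over >= 100, perc.over %% 100 == 0)
  n_synthetic <- n_minority * (perc.over / 100)           # new minority instances
  n_majority  <- floor((perc.under / 100) * n_synthetic)  # majority instances kept
  c(minority = n_minority + n_synthetic, majority = n_majority)
}

# Step 3 settings: 10 minority rows, perc.over = 100, default perc.under = 200
smote_counts(10, perc.over = 100)  # minority = 20, majority = 20
```

Under these assumptions, the class summary of the balanced data should show 20 instances of each class rather than 90 and 10.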

Step 4: Validate the Transformation

summary(balanced_data$Class)

Benefits of SMOTE:

The benefits of using SMOTE (Synthetic Minority Over-sampling Technique) in machine learning include:

1. Improved Model Performance:

By generating synthetic instances of the minority class, SMOTE can improve model performance on imbalanced data. The model sees enough minority examples to learn effectively from the underrepresented class.

2. Better Generalization:

Using SMOTE to address class imbalance reduces the risk that a model becomes biased toward the majority class. The model learns to handle both classes, which can improve its generalization to new, unseen data.

3. Enhanced Minority Class Representation:

Synthetic samples compensate for the shortage of minority-class data. The result is a more balanced dataset and a model with a better grasp of the underrepresented class.

4. Robustness Against Overfitting:

Simply duplicating minority instances (random oversampling) tends to make a model overfit to those few points. SMOTE creates new, interpolated instances rather than copies. This adds diversity to the minority class and makes SMOTE less prone to this kind of overfitting.

5. Increased Sensitivity to Minority Class:

Models trained on imbalanced data often fail to identify minority-class instances. SMOTE makes the model more sensitive to patterns in the underrepresented class, which typically improves classification performance on that class.

6. Addressing Rare Events:

In rare-event applications such as fraud detection or disease diagnosis, SMOTE supplies the model with enough examples to learn from, even when the positive class is extremely scarce.

7. Flexibility in Implementation:

SMOTE works on the data rather than the model, so it fits into many different machine learning workflows. Its simplicity and effectiveness often make it a first choice for handling class imbalance.

8. Applicability Across Domains:

SMOTE is useful in finance, healthcare, and other domains where imbalanced datasets are common.

9. Reduction of Bias:

By giving both classes adequate representation during training, SMOTE reduces a model's bias toward the majority class. The resulting predictions are fairer and less biased, which matters most when one class is severely underrepresented.

10. Enhanced Decision Boundaries:

The synthetic instances help refine the decision boundary between classes. As a result, the model separates the classes more effectively and classifies minority-class instances more accurately.
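The overfitting argument in benefit 4 comes down to the difference between copying minority rows and interpolating between them. The base R sketch below uses made-up data: it grows a 10-row minority class to 50 rows both ways and then counts the distinct rows. For brevity, the SMOTE-style part uses only the single nearest neighbor (k = 1), a simplification of the usual k = 5.

```r
set.seed(1)
minority <- matrix(rnorm(20), ncol = 2)   # 10 made-up minority instances, 2 features
n_new <- 40

# Random oversampling: duplicate existing rows
dup_idx <- sample(nrow(minority), n_new, replace = TRUE)
oversampled <- rbind(minority, minority[dup_idx, ])

# SMOTE-style: interpolate between each seed and its nearest minority neighbor
d <- as.matrix(dist(minority))
diag(d) <- Inf                                   # exclude the point itself
seeds <- sample(nrow(minority), n_new, replace = TRUE)
nn <- apply(d[seeds, , drop = FALSE], 1, which.min)
lambda <- runif(n_new)                           # one position per synthetic row
synthetic <- minority[seeds, ] + lambda * (minority[nn, ] - minority[seeds, ])
smoted <- rbind(minority, synthetic)

nrow(unique(oversampled))  # 10: duplication adds no new points
nrow(unique(smoted))       # 50: every synthetic point is new
```

Duplication leaves the model with the same 10 points, only weighted more heavily. Interpolation fills in the region between them.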


Considerations and Limitations of SMOTE:

SMOTE is a valuable tool, but it has limitations. It can introduce noise when the minority class is already adequately represented. The same happens when it generates synthetic instances in regions where the minority and majority classes overlap. SMOTE also needs careful thought in specific contexts. In time-series data, interpolating between instances ignores temporal order. Domains with sensitive information raise their own concerns.

FAQ: Addressing Class Imbalance with SMOTE in Machine Learning

Q1: What is class imbalance in machine learning?

Class imbalance occurs when one class greatly outnumbers the others in a dataset. This can bias machine learning models toward the majority class, leading to poor performance on the minority classes.

Q2: How does class imbalance affect machine learning models?

Imbalanced datasets can lead models to favor the majority class, which often results in poor generalization for the minority class. This matters most in tasks such as fraud detection or rare disease prediction, where recognizing minority-class patterns is crucial. There, imbalance significantly impairs the model's ability to identify the trends that matter.

Q3: What is SMOTE, and how does it address class imbalance?

SMOTE, short for Synthetic Minority Over-sampling Technique, tackles class imbalance by synthesizing new instances of the underrepresented class. It does this through interpolation between existing examples. The resulting training data is more balanced and more varied, which improves the model's ability to learn from minority-class samples.

Q4: Why is SMOTE important in machine learning?

SMOTE mitigates the impact of class imbalance and can improve prediction accuracy across all classes. By producing a balanced dataset, it keeps models from skewing toward the majority class. This makes it a valuable tool for many practical modeling tasks.

Q5: How do I implement SMOTE in R?

To implement SMOTE in R with the `DMwR` package, load your dataset and call the `SMOTE` function. Specify the target class column (`Class`) in the formula, the oversampling percentage (`perc.over`), and the number of nearest neighbors (`k`).

Q6: Are there any potential drawbacks to using SMOTE?

Yes. Although SMOTE is effective in many cases, the synthetic instances it generates can add noise to the dataset. Its performance also varies with the characteristics of the dataset.

Q7: Can SMOTE be applied to any machine learning algorithm?

Yes. SMOTE is algorithm-agnostic: it balances the dataset rather than modifying any particular model. It can therefore be used with decision trees, support vector machines, neural networks, and many other algorithms.

Q8: Are there alternatives to SMOTE for handling class imbalance?

Yes. Other techniques can also address class imbalance, such as undersampling, cost-sensitive learning, and ensemble methods. Which method to choose depends on the dataset's characteristics and the goals of the machine learning task.
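One common cost-sensitive heuristic weights each class inversely to its frequency: w_c = n / (K n_c). Here n is the number of instances, K the number of classes, and n_c the size of class c. The sketch below applies this rule to the same 90/10 split used in the hands-on example. `class_weights()` is a hypothetical helper. How the weights are used depends on the learner; many R modeling functions accept a `weights` argument for per-row weights.

```r
# Hypothetical helper: inverse-frequency ("balanced") class weights
class_weights <- function(y) {
  counts <- table(y)
  w <- length(y) / (length(counts) * counts)   # n / (K * n_c)
  setNames(as.numeric(w), names(counts))
}

y <- factor(rep(c("Majority", "Minority"), times = c(90, 10)))
w <- class_weights(y)
w  # Majority: 100 / (2 * 90) ≈ 0.556, Minority: 100 / (2 * 10) = 5

# One weight per row, for learners that accept case weights
row_weights <- w[as.character(y)]
```

Unlike SMOTE, this leaves the data untouched and instead makes each minority error count about nine times as much as a majority error.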

Q9: Does SMOTE guarantee improved model performance?

No. SMOTE can improve model performance significantly on imbalanced datasets, but its effectiveness depends on the characteristics of the dataset. Evaluate its impact with careful validation. Use metrics suited to imbalanced data, such as precision, recall, and F1 score on the minority class.
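Apply SMOTE only to the training portion of the data, after splitting. That way the test set keeps the real class distribution and contains no synthetic points. The base R sketch below makes a stratified 70/30 split of a 90/10 example. It also defines a hypothetical `minority_metrics()` helper for precision, recall, and F1 on the minority class. The SMOTE call itself is left as a comment.

```r
set.seed(123)
y <- factor(rep(c("Majority", "Minority"), times = c(90, 10)))

# Stratified split: hold out 30% of each class for testing
test_idx <- unlist(lapply(split(seq_along(y), y),
                          function(idx) sample(idx, round(0.3 * length(idx)))))
train_idx <- setdiff(seq_along(y), test_idx)
# Oversample data[train_idx, ] only (e.g. with DMwR's SMOTE); never the test rows

# Hypothetical helper: precision, recall and F1 for the positive (minority) class
minority_metrics <- function(actual, predicted, positive) {
  tp <- sum(predicted == positive & actual == positive)
  fp <- sum(predicted == positive & actual != positive)
  fn <- sum(predicted != positive & actual == positive)
  precision <- if (tp + fp > 0) tp / (tp + fp) else NA_real_
  recall    <- if (tp + fn > 0) tp / (tp + fn) else NA_real_
  f1 <- if (isTRUE(precision + recall > 0)) {
    2 * precision * recall / (precision + recall)
  } else NA_real_
  c(precision = precision, recall = recall, f1 = f1)
}

minority_metrics(actual    = c("fraud", "legit", "fraud", "legit"),
                 predicted = c("fraud", "fraud", "legit", "legit"),
                 positive  = "fraud")
# precision = 0.5, recall = 0.5, f1 = 0.5
```

Comparing these metrics on the untouched test set, with and without SMOTE on the training set, shows whether oversampling actually helps for a given dataset.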

Q10: Where can I find resources to learn more about SMOTE and class imbalance?

Good starting points are academic papers, online tutorials, and the documentation for the `DMwR` package in R. Many machine learning books also cover imbalanced datasets in depth and explain a range of techniques, including SMOTE.

Conclusion:

Techniques such as SMOTE offer a practical way to address class imbalance and the challenges that come with skewed datasets. Demand is growing for models that are both accurate and fair. Understanding and applying these techniques is therefore essential to building reliable and effective machine learning systems.
